In an era of increasingly sophisticated digital threats, safeguarding your personal, financial, and work-related data is more important than ever. One effective way to keep sensitive files secure is folder encryption.

Folder encryption allows you to lock your files with a password, ensuring that only authorized users can access them.

In this article, we’ll explore the concept of folder encryption, its importance, and why Best Folder Encryptor is one of the best tools for the job.

What is Folder Encryption?

Folder encryption is the process of securing a folder by converting its contents into an unreadable format that can only be restored to its original state with the correct password. It essentially acts as a lock on your folder, preventing unauthorized access to your sensitive data.

Folder encryption ensures that even if someone gains physical access to your device, they cannot open or view your files without the correct password.
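To make "unreadable unless you have the password" concrete, here is a minimal Python sketch of password-based encryption that uses only the standard library. It illustrates the general idea and is not how Best Folder Encryptor works internally. The password is stretched into keys with PBKDF2 (`hashlib.pbkdf2_hmac`), the data is XORed with an HMAC-SHA256 keystream, and an HMAC tag lets decryption detect a wrong password. The function names, the 100,000-iteration count, and the output layout (salt, nonce, tag, ciphertext) are hypothetical choices. Production tools rely on vetted ciphers such as AES rather than a hand-rolled scheme like this one.

```python
import hashlib
import hmac
import os

# Illustrative sketch only: a toy password-based encryption scheme built from
# the standard library. Real tools use vetted ciphers such as AES; this is NOT
# Best Folder Encryptor's method. Names and parameters are hypothetical.

ITERATIONS = 100_000  # PBKDF2 work factor (assumed value)


def derive_keys(password: str, salt: bytes) -> tuple[bytes, bytes]:
    """Stretch the password into a 32-byte encryption key and a 32-byte MAC key."""
    material = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS, dklen=64
    )
    return material[:32], material[32:]


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Produce `length` pseudorandom bytes using HMAC-SHA256 in counter mode."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256)
        out += block.digest()
        counter += 1
    return bytes(out[:length])


def encrypt(password: str, plaintext: bytes) -> bytes:
    """Return salt + nonce + tag + ciphertext for the given plaintext."""
    salt, nonce = os.urandom(16), os.urandom(16)
    enc_key, mac_key = derive_keys(password, salt)
    stream = keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, stream))
    # The tag lets decrypt() detect a wrong password or tampered data.
    tag = hmac.new(mac_key, salt + nonce + ciphertext, hashlib.sha256).digest()
    return salt + nonce + tag + ciphertext


def decrypt(password: str, blob: bytes) -> bytes:
    """Restore the plaintext, or raise ValueError if the password is wrong."""
    salt, nonce, tag, ciphertext = blob[:16], blob[16:32], blob[32:64], blob[64:]
    enc_key, mac_key = derive_keys(password, salt)
    expected = hmac.new(mac_key, salt + nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("wrong password or corrupted data")
    stream = keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ k for c, k in zip(ciphertext, stream))
```

Calling `encrypt("my passphrase", data)` returns bytes that look random. `decrypt` with the same passphrase restores the original data, while any other passphrase raises `ValueError`. A folder-level tool might apply this kind of scheme to each file in a folder or pack the folder into a single encrypted container.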

Why is Folder Encryption Important?

There are several compelling reasons to encrypt your folders:

1. Prevent Unauthorized Access: On a shared computer or storage device, only people who know the password can open your encrypted folders.

2. Protect Sensitive Information: Whether it’s personal data such as passwords and financial records or confidential work documents, encryption helps keep your sensitive information from leaking.

3. Ensure Privacy: Folder encryption protects your privacy by ensuring that your data is not exposed even if your device is lost or stolen.

4. Compliance with Regulations: In many industries, regulations require data protection measures. Encrypting folders helps meet these requirements.

Best Folder Encryptor: A Comprehensive Solution

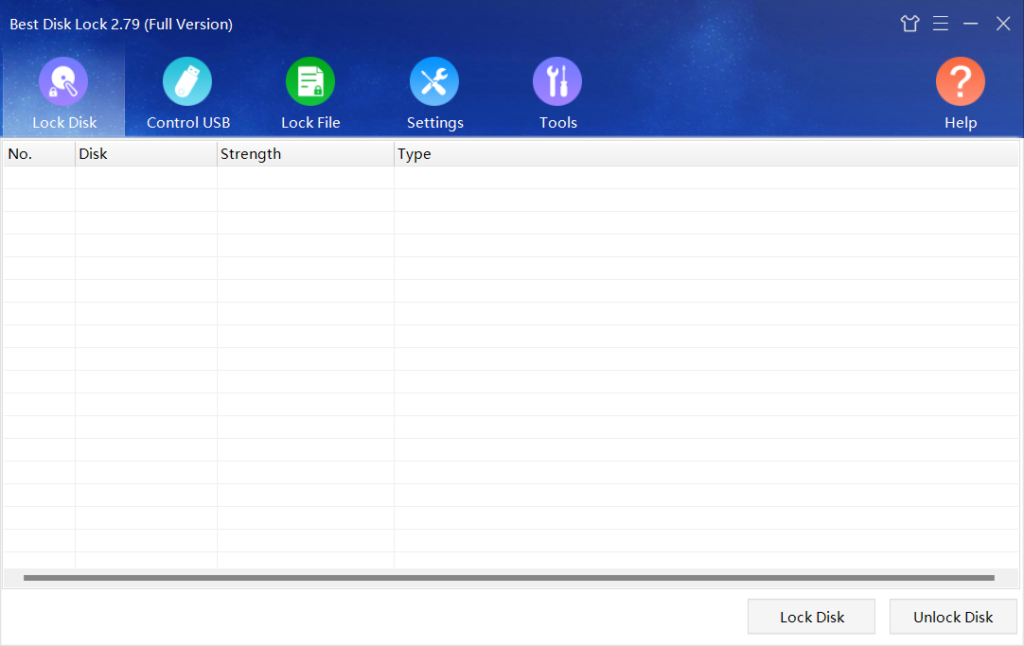

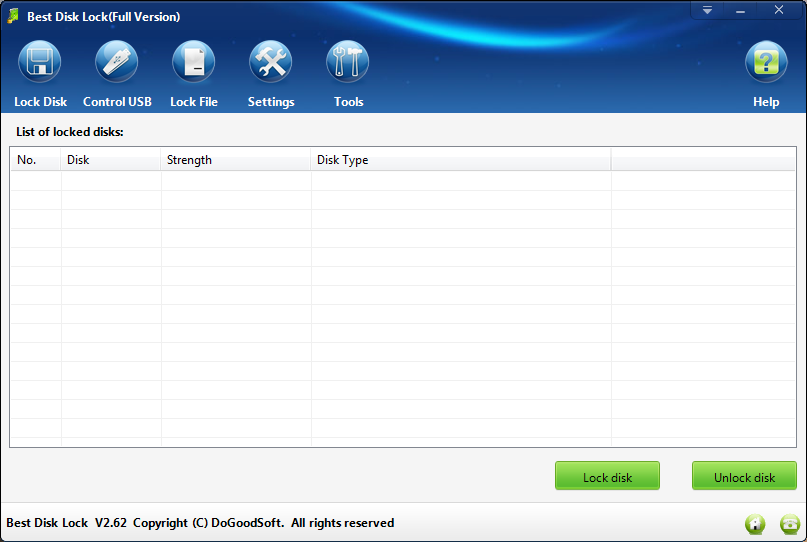

When it comes to folder encryption, Best Folder Encryptor is one of the most reliable and user-friendly tools available. Here’s why:

* Ease of Use: Best Folder Encryptor is designed with both beginners and advanced users in mind. Its intuitive interface makes it easy to encrypt folders with just a few clicks, without requiring technical knowledge.

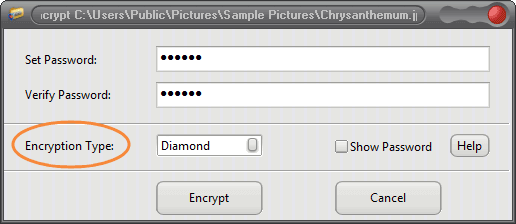

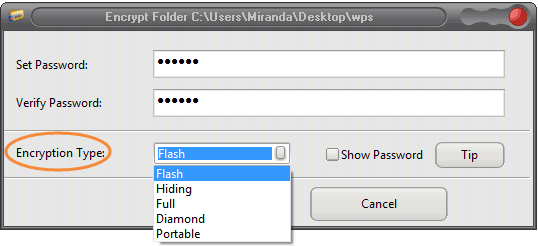

* Strong Encryption Algorithms: Best Folder Encryptor offers five folder encryption methods. Diamond-encryption, for example, uses advanced encryption standards to keep your data highly secure.

* Password Protection: You can set a strong password for your encrypted folder, and Best Folder Encryptor ensures that your files cannot be accessed without the correct password.

* Portable Encryption: The Portable-encryption method lets you create encrypted folders that you can carry on external drives or USB sticks, so your files stay protected wherever you take them, even if the drive is lost.

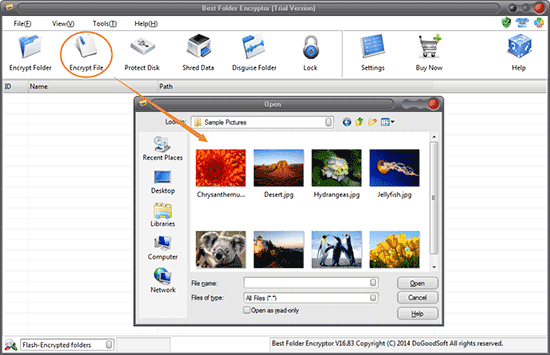

* Multiple Encryption Options: You can encrypt a single file, a single folder, or multiple folders, choosing the encryption method that best fits your needs.

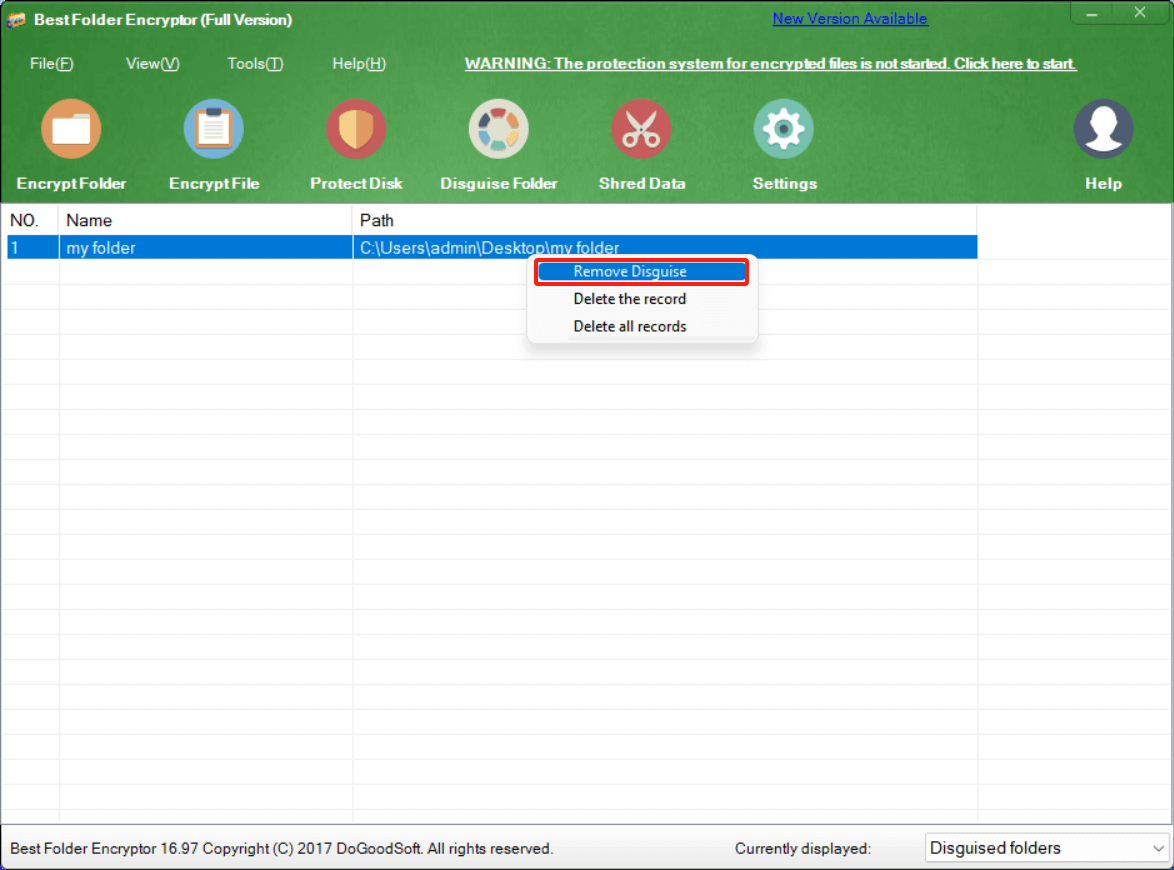

* File Shredding: If you need to securely delete files, Best Folder Encryptor includes a file shredding feature designed to make deleted files unrecoverable.
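File shredding generally means overwriting a file's contents before deleting it, so the original bytes are not simply left on disk for recovery tools to find. The Python sketch below shows that general idea using only the standard library. `shred_file` and its three-pass default are hypothetical and do not describe Best Folder Encryptor's own algorithm. On SSDs (because of wear leveling) and on journaling or copy-on-write file systems, overwriting a file in place does not guarantee that every old copy of the data is destroyed.

```python
import os


def shred_file(path: str, passes: int = 3) -> None:
    """Overwrite a file's bytes with random data several times, then delete it.

    Hypothetical helper for illustration only. On SSDs and on journaling or
    copy-on-write file systems, old copies of the data may survive elsewhere.
    """
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)  # start each pass at the beginning of the file
            remaining = size
            while remaining > 0:
                chunk = min(remaining, 1024 * 1024)  # write in 1 MiB pieces
                f.write(os.urandom(chunk))
                remaining -= chunk
            f.flush()
            os.fsync(f.fileno())  # push this pass to the storage device
    os.remove(path)  # finally remove the directory entry
```

Calling `shred_file("old_report.pdf")` overwrites the file three times and then deletes it. Writing in fixed-size chunks keeps memory use small even for very large files.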

How to Use Best Folder Encryptor

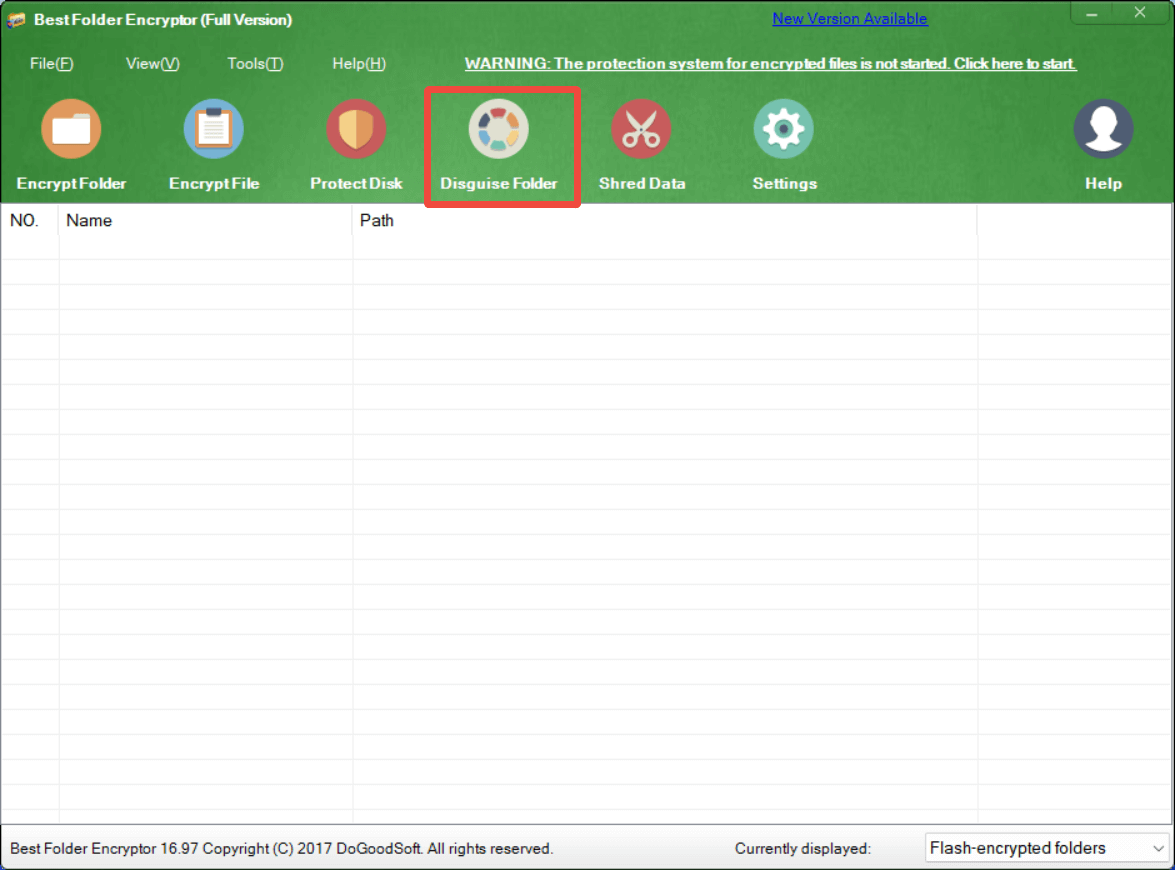

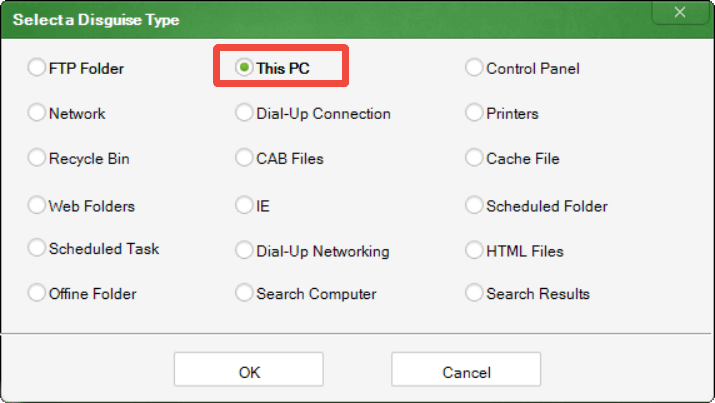

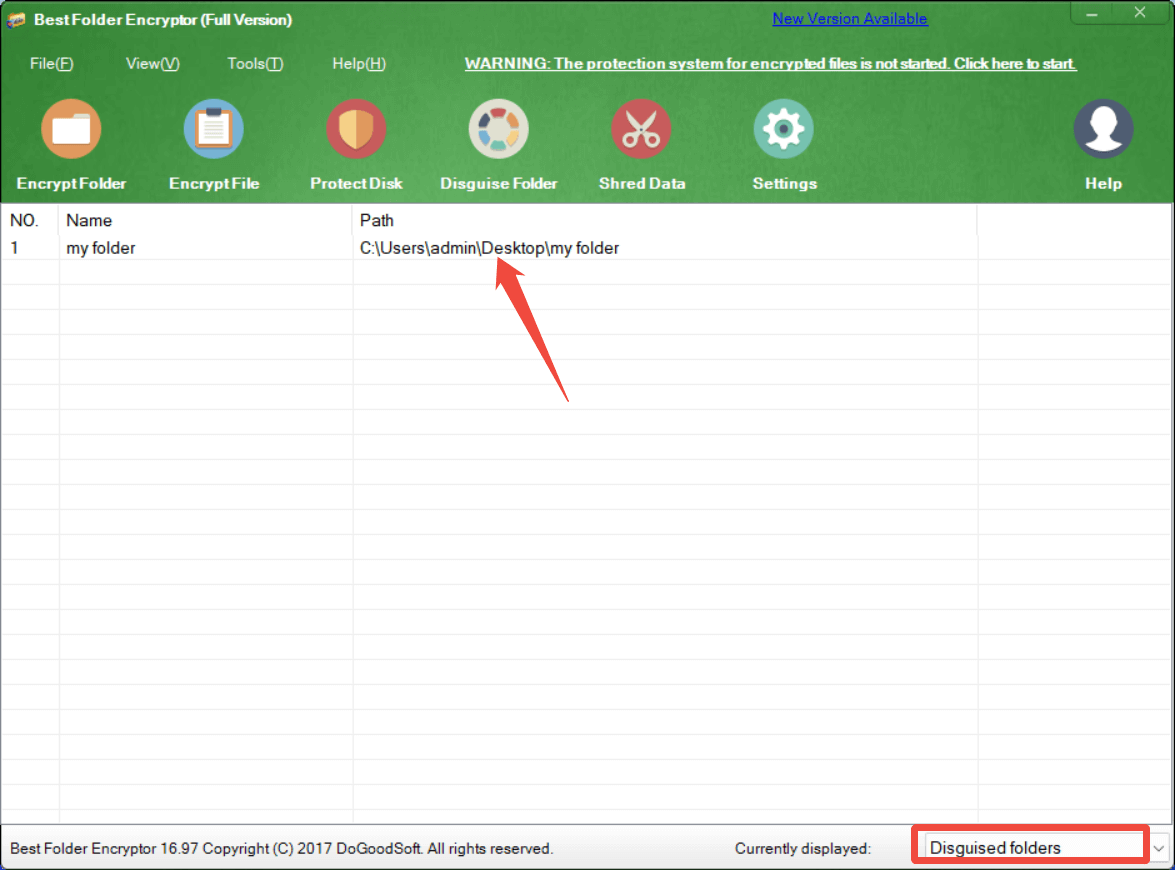

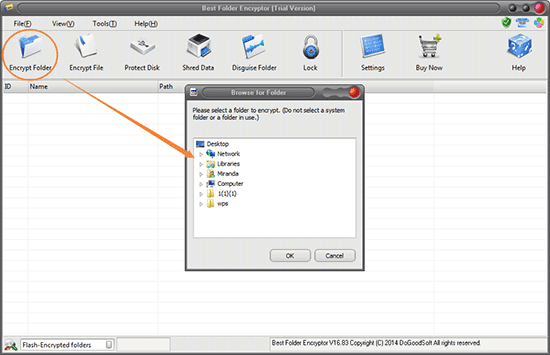

Using Best Folder Encryptor is simple and straightforward:

1. Download and Install: First, download the Best Folder Encryptor software from its official website and install it on your computer.

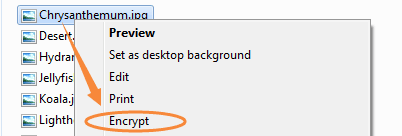

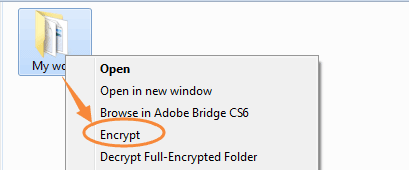

2. Select the Folder: Right-click the folder you want to encrypt and select Encrypt.

3. Set a Strong Password: Create a strong password to protect your folder. Ensure that the password is unique and difficult to guess.

4. Encrypt the Folder: Click on the encryption option to secure your folder. The software will encrypt the folder, making it accessible only with the correct password.

5. Access the Folder: To access your encrypted folder, open it and enter your password; you can then view or modify its contents. When you close the folder, it automatically returns to its encrypted state.
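For step 3, one way to get a password that is difficult to guess is to generate it randomly rather than invent it. This Python sketch uses the standard `secrets` module, which is intended for security-sensitive randomness. The 16-character default, the `generate_password` name, and the rule that every character class must appear are assumptions for illustration, not requirements of Best Folder Encryptor.

```python
import secrets
import string

# 52 letters + 10 digits + 32 punctuation characters = 94 symbols
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return a random password containing lowercase, uppercase, digit and punctuation.

    Hypothetical helper: draws each character with secrets.choice and retries
    until every character class is present (an assumed policy).
    """
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate
```

Each character drawn from the 94-symbol alphabet contributes about 6.5 bits of randomness, so every extra character makes the password substantially harder to guess.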

Important Notes

1. Decrypt encrypted files and folders before you back up or move them. Never manipulate encrypted folders directly while they are still encrypted.

2. Remember your encryption password. With Diamond-encryption, Full-encryption, and Portable-encryption, a forgotten password means you cannot open or decrypt the folder, and there is no password recovery function.